-

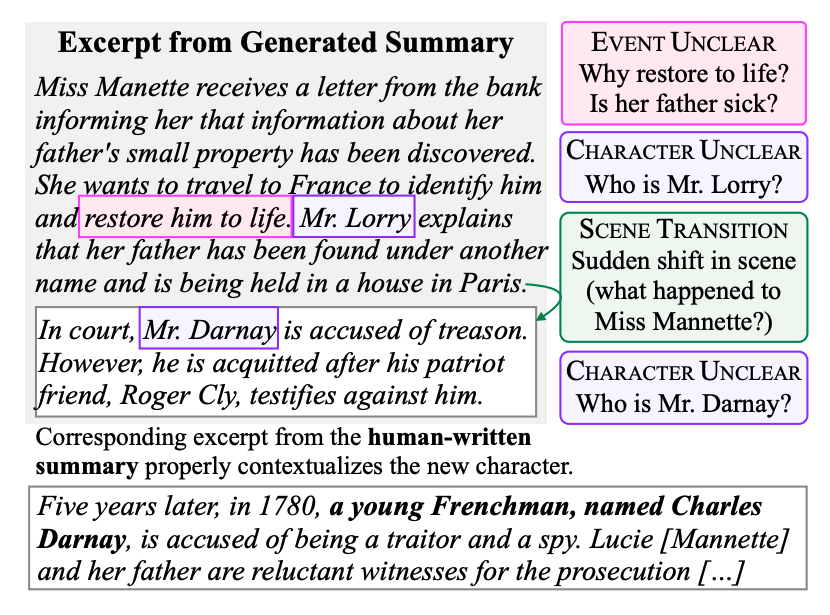

SNaC: Coherence Error Detection for Narrative Summarization


Tanya Goyal, Junyi Jessy Li, and Greg Durrett. Oral Session 5 (Friday), Virtual talk page

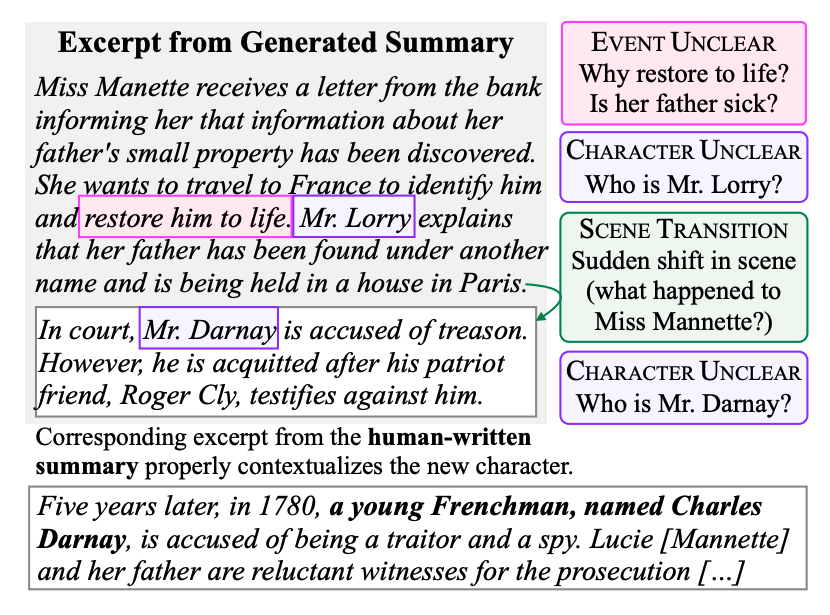

We annotate coherence errors in strong systems for narrative summarization, including GPT-3 tuned with human feedback and a BART model.

Such errors are still a first-order challenge in these models, and we show that we can train models to detect them.

Also check out Tanya's other paper in the same session, HydraSum! Tanya is on the academic job market!

-

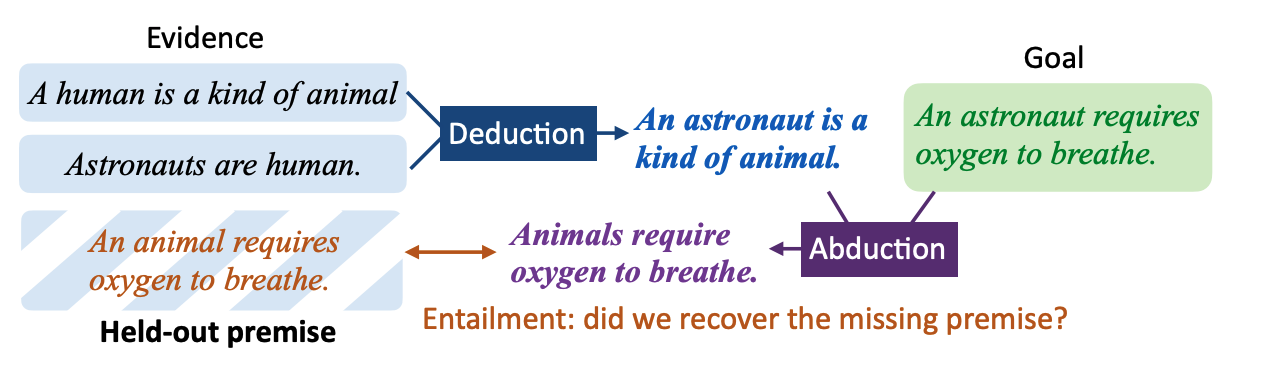

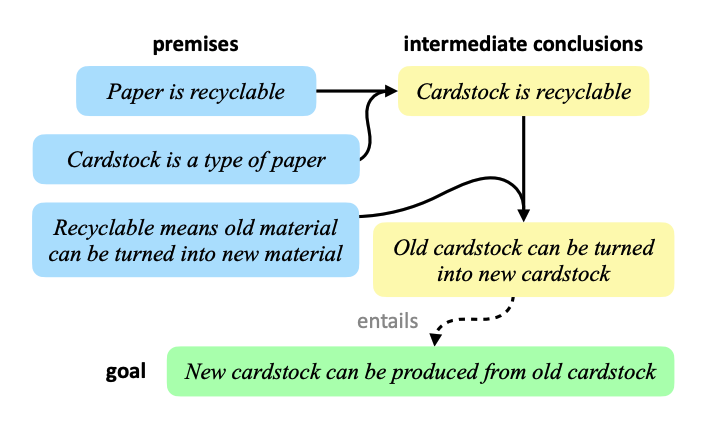

Natural Language Deduction with Incomplete Information


Zayne Sprague, Kaj Bostrom, Swarat Chaudhuri, and Greg Durrett. Poster Session 2 (Friday), Virtual talk page

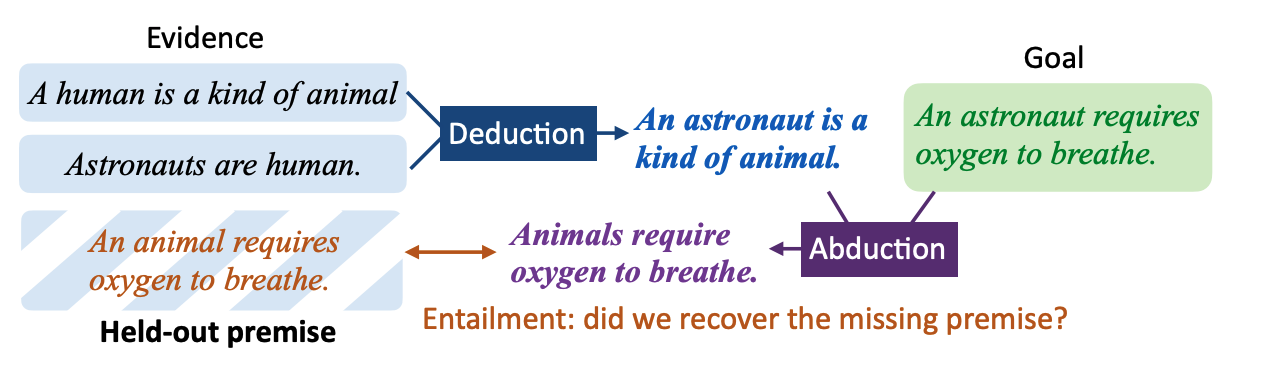

When we want to build natural language proofs of claims given premises, not all premises may be explicitly stated in practice.

We combine deductive and abductive reasoning to build such proofs, generating the unstated premises that the proof needs in order to be correct.

This is an extension of the SCSearch system presented in our EMNLP 2022 Findings paper (see below).

Zayne is applying for PhDs this year!

-

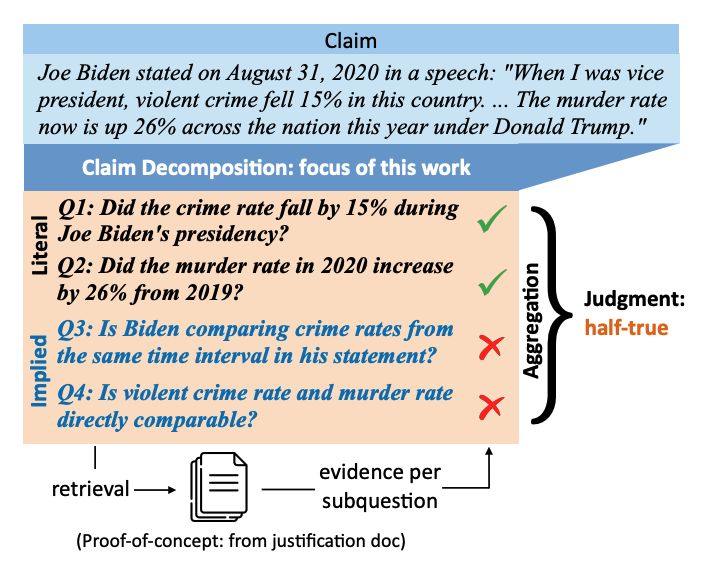

Generating Literal and Implied Subquestions to Fact-check Complex Claims


Jifan Chen, Aniruddh Sriram, Eunsol Choi, and Greg Durrett. Poster Session 13+14 (Sunday), Virtual talk page

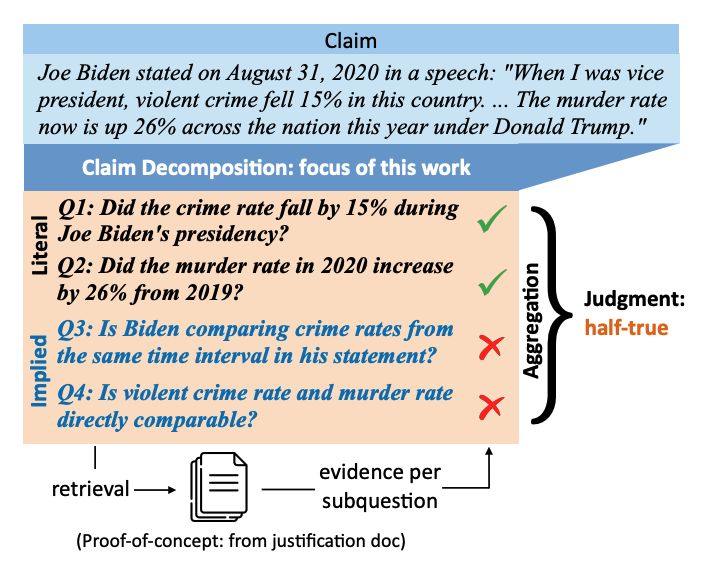

We show how complex political claims from PolitiFact can be decomposed into a series of yes-no questions whose answers help determine the veracity of the claim.

We annotate a dataset of claims from PolitiFact and train generation models to automate the decomposition task.

-

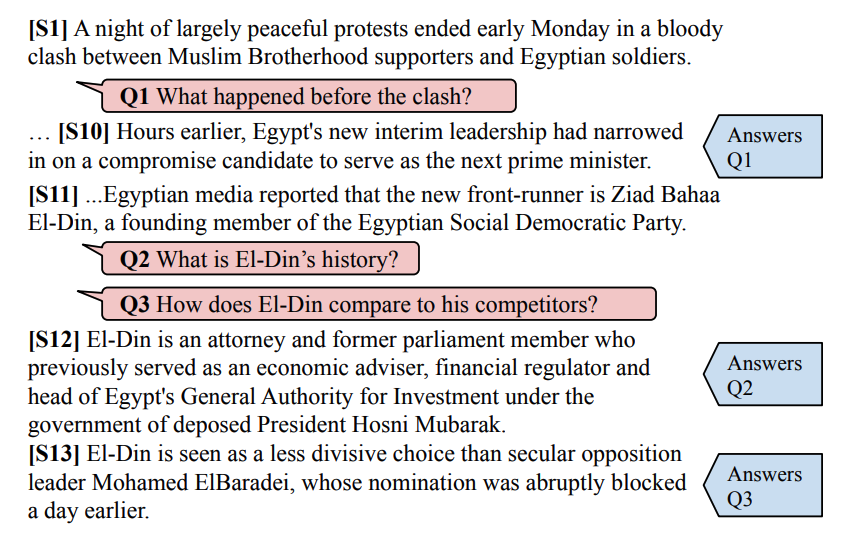

Discourse Comprehension: A Question Answering Framework to Represent Sentence Connections


Wei-Jen Ko, Cutter Dalton, Mark Simmons, Eliza Fisher, Greg Durrett, and Junyi Jessy Li. Poster Session 7 (Saturday), Virtual talk page

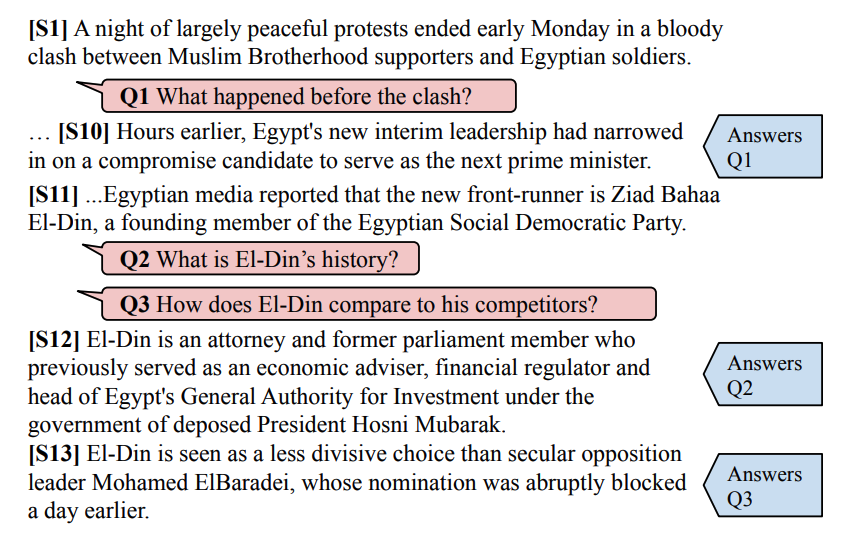

We release a dataset of questions annotated so that they are answered by sentences in news articles. These questions are designed to arise from earlier "anchored" sentences and express a discourse relation between an anchor sentence and the answer sentence.

-

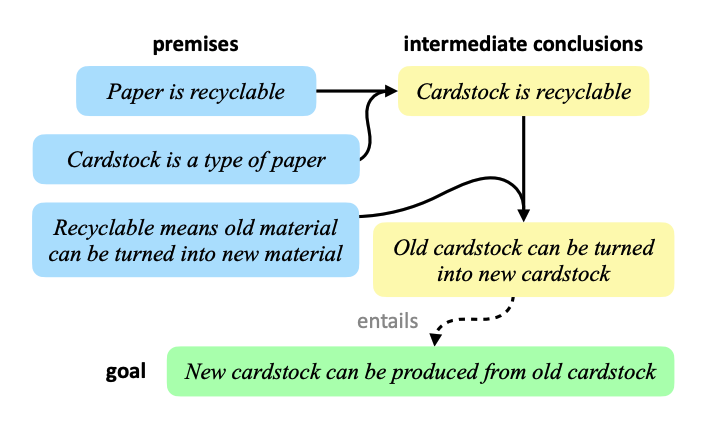

[Findings] Natural Language Deduction through Search over Statement Compositions


Kaj Bostrom, Zayne Sprague, Swarat Chaudhuri, and Greg Durrett. Virtual talk page

We propose a system to repeatedly generate natural language statements that are entailed by a collection of premises. By doing so, we can construct natural language "proofs" of hypotheses and do entailment in an explainable way.

-

[Demo] FALTE: A Toolkit for Fine-grained Annotation for Long Text Evaluation

Tanya Goyal, Junyi Jessy Li, and Greg Durrett. Virtual talk page

Demo of the system used to collect our dataset for SNaC.
