-

Flexible Generation of Natural Language Deductions

Kaj Bostrom, Xinyu Zhao, Swarat Chaudhuri, and Greg Durrett. Virtual Poster Session 2 (Monday 12:30pm AST).

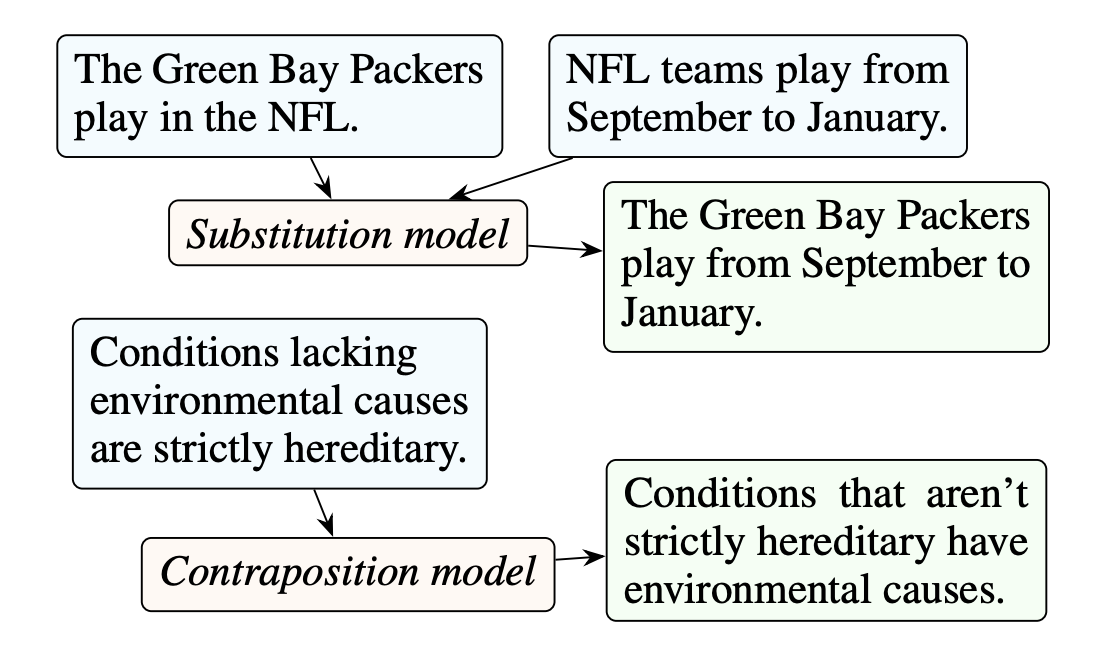

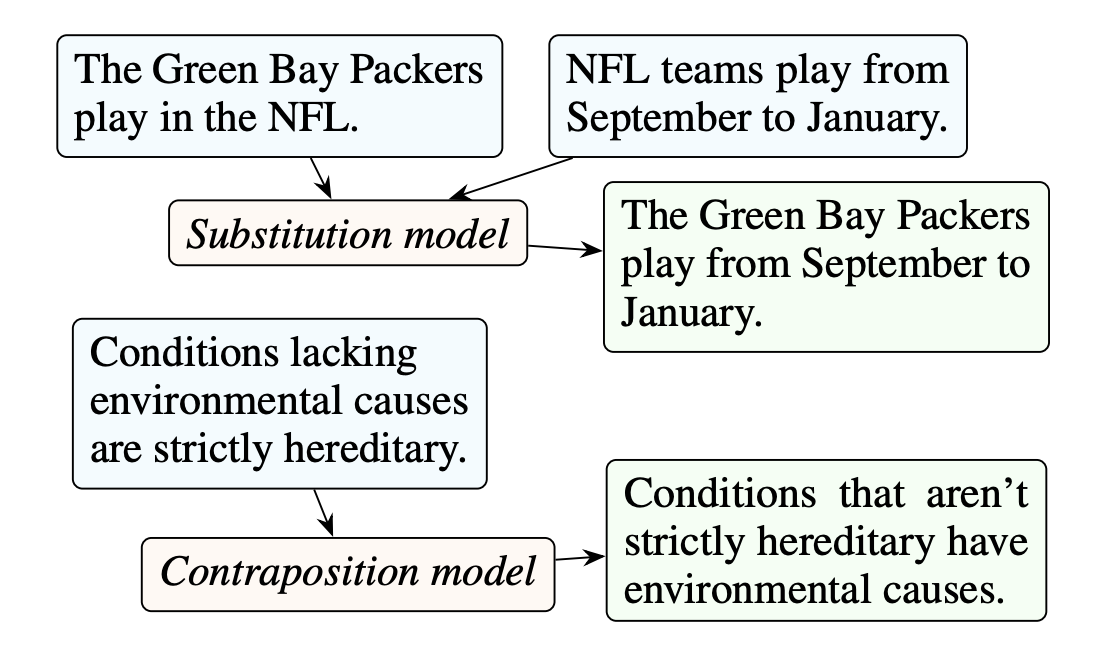

We build a model to generate valid deductions in natural language: statements that represent useful logical inferences from a handful of input premises. This model is a crucial building block for systems that can do long-horizon, explainable reasoning in natural language; come talk to us about our goals for it!

-

Connecting Attributions and QA Model Behavior on Realistic Counterfactuals

Xi Ye, Rohan Nair, and Greg Durrett. Virtual Poster Session 2 (Monday 12:30pm AST).

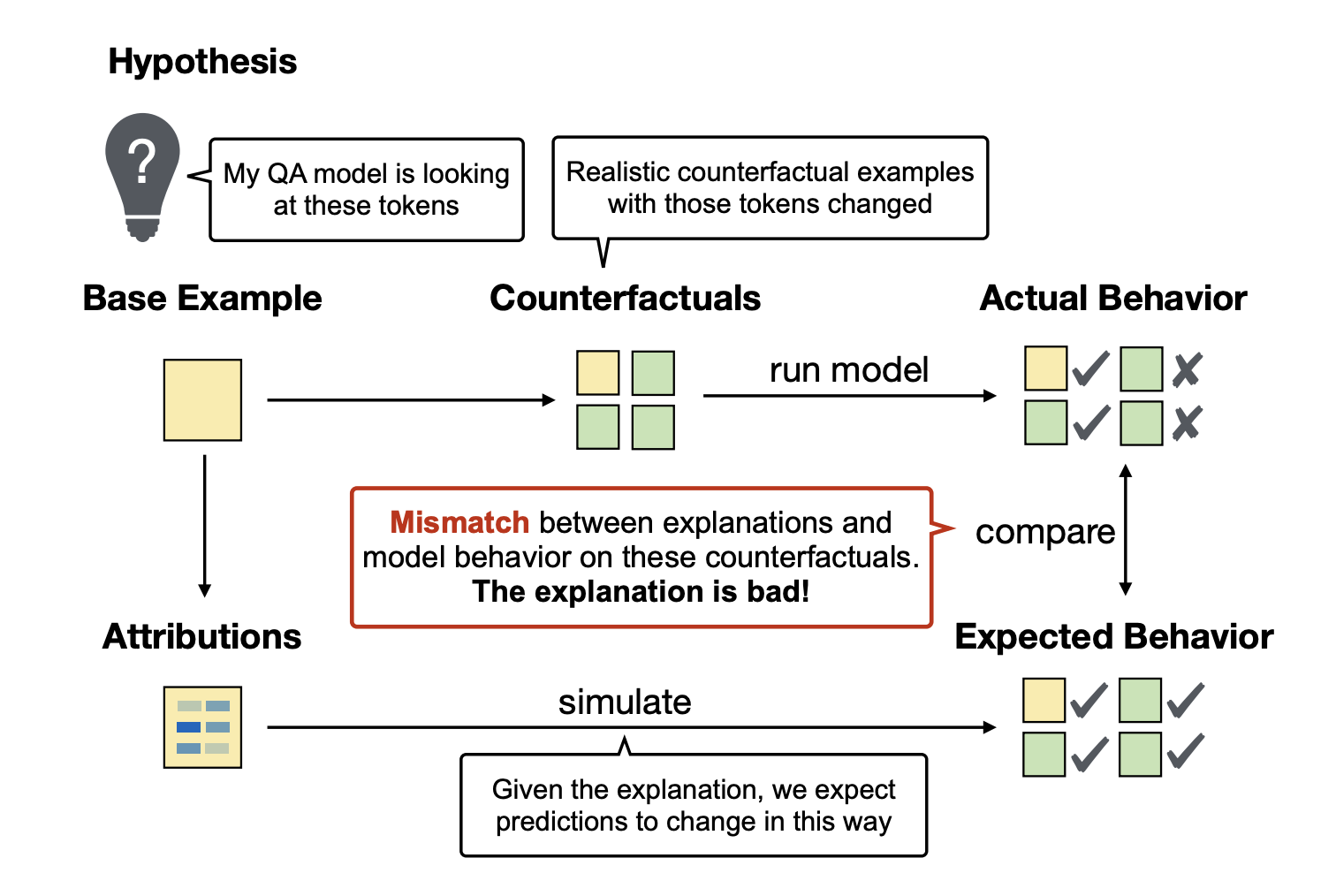

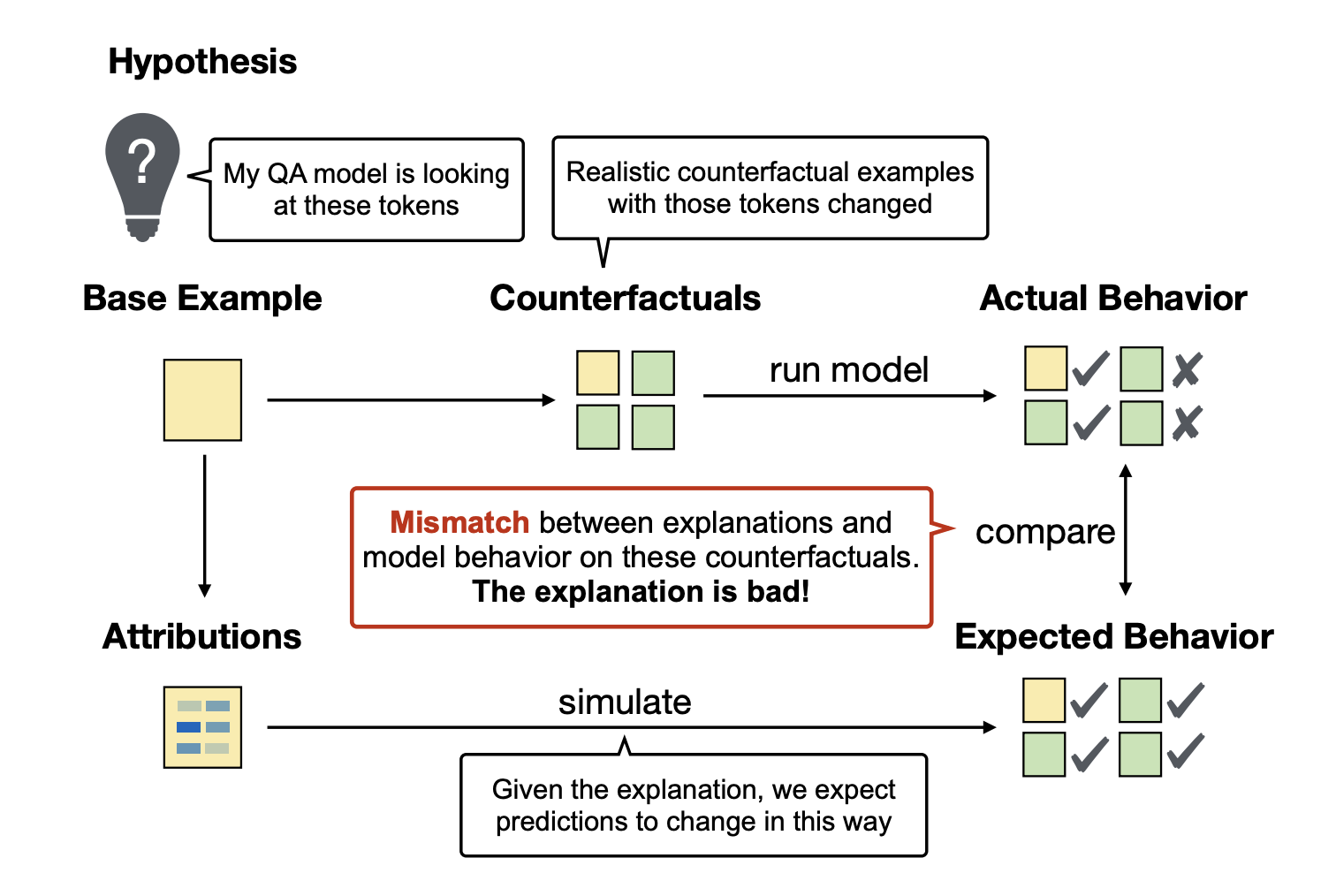

Explanations should tell us how models behave on counterfactuals: if the input were different in some way, how would the prediction change? But which counterfactuals? We present a way to analyze the validity of explanations for reasoning about a set of realistic counterfactuals, with a focus on QA. Commonly used attribution techniques fall short on this measure of validity, but we show some results suggesting a class of techniques that might do better!

-

[Findings] Can NLI Models Verify QA Systems' Predictions?

Jifan Chen, Eunsol Choi, and Greg Durrett. To be presented at MRQA.

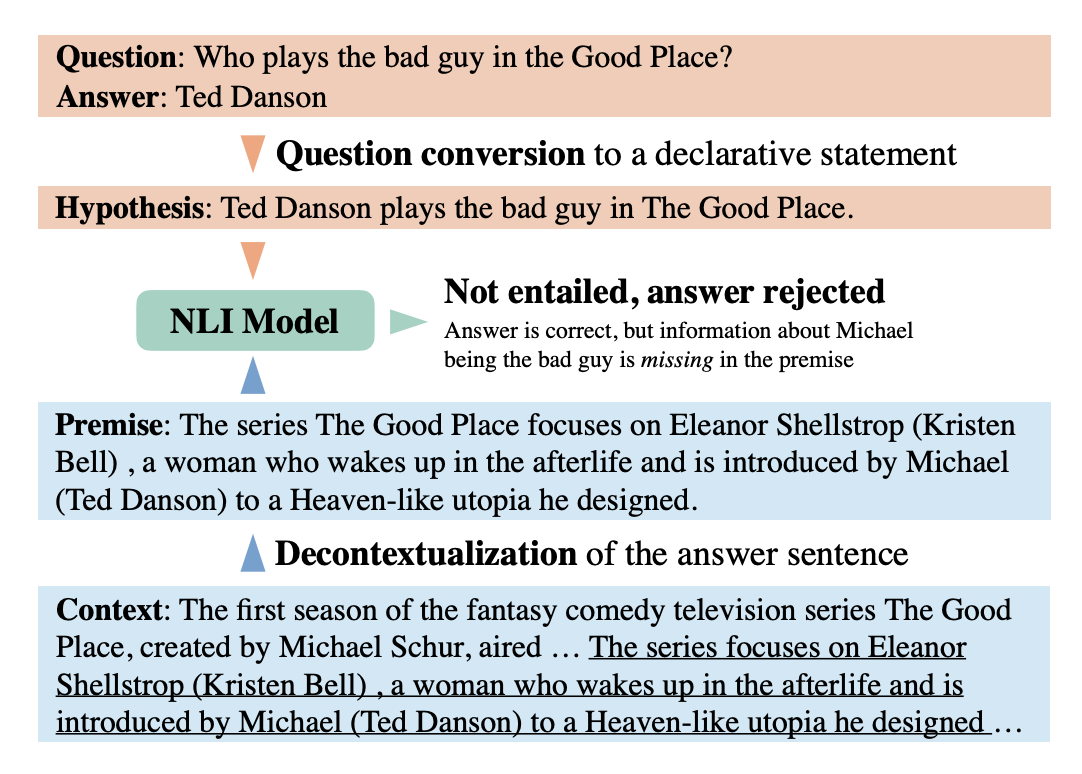

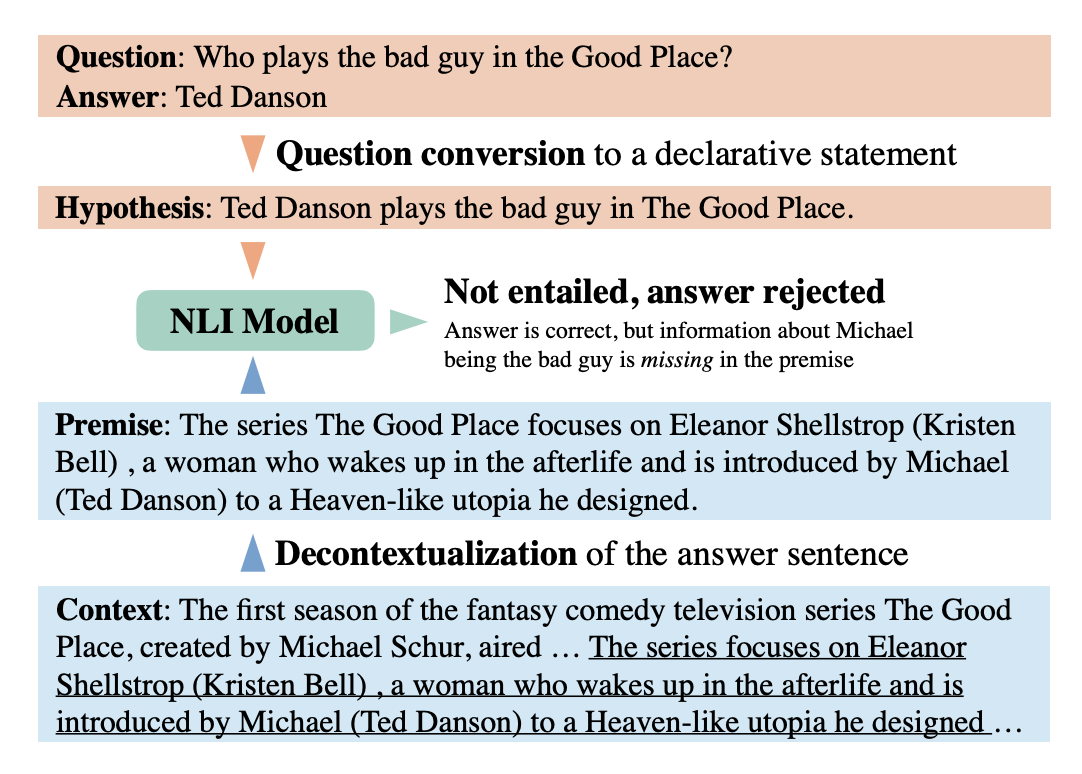

Combining existing models for manipulating questions and passages in natural language lets off-the-shelf NLI models check the work of QA systems. We show that this can improve confidence in our systems, and even in our datasets, through an auditing process.

-

[Findings] Optimal Neural Program Synthesis from Multimodal Specifications

Xi Ye, Qiaochu Chen, Isil Dillig, and Greg Durrett.

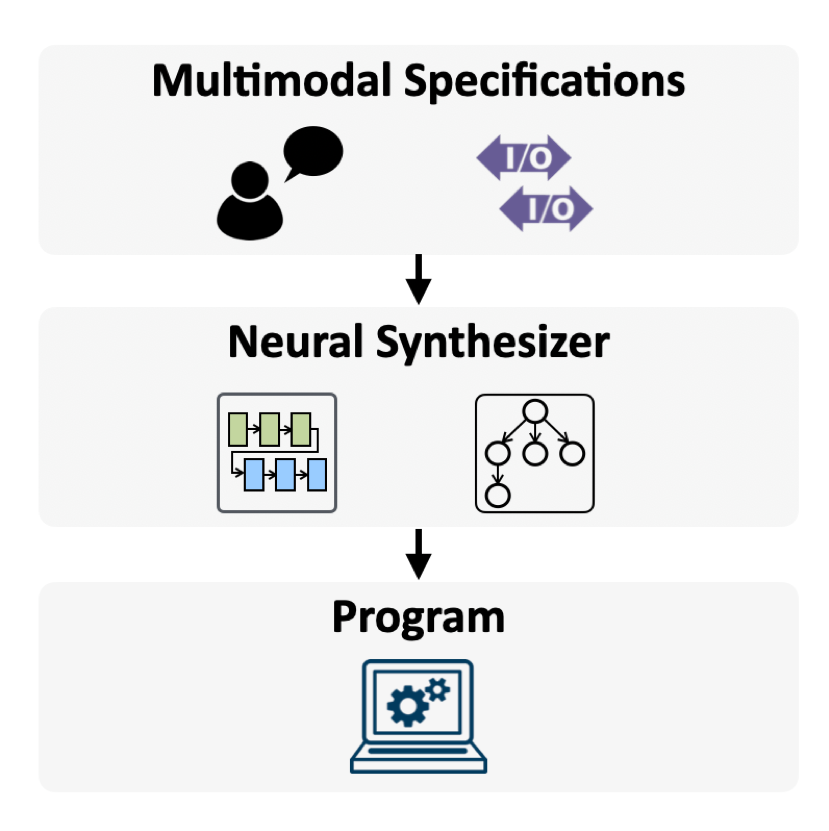

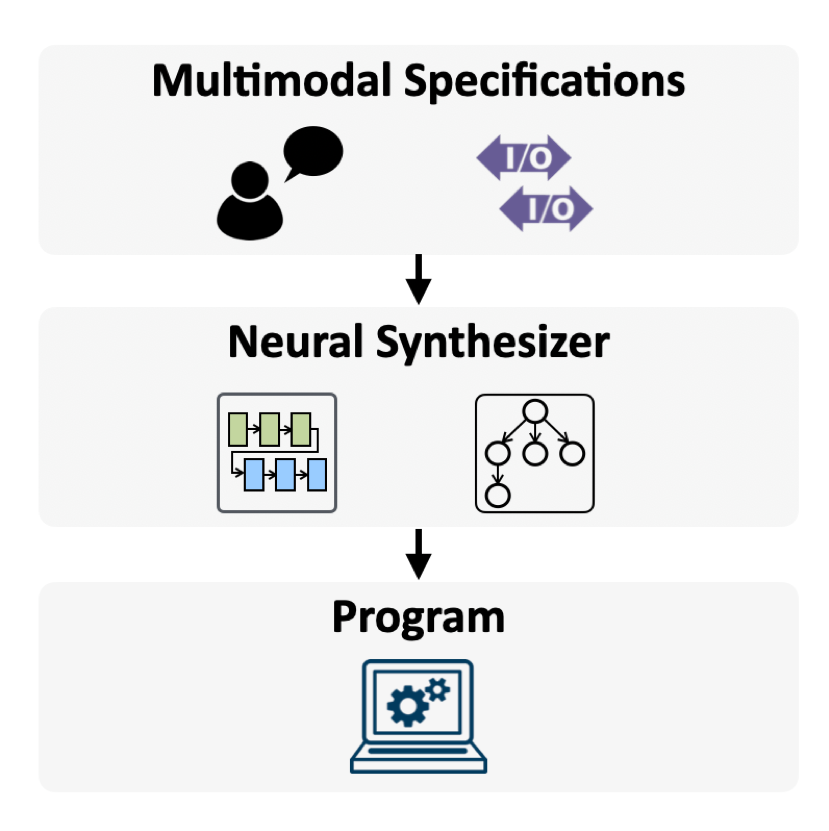

We present a neural program synthesis model that takes natural language and examples as input. Unlike other language-to-code models, we use sophisticated pruning techniques based on program semantics to guide the search and find the optimal program according to the model. We show strong improvements on a regex synthesis task from our prior work.
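To make the idea of semantics-based pruning concrete, here is a toy sketch in Python. It is not the paper's algorithm: the "model" is a hypothetical fixed table of atom probabilities (`ATOM_PROBS`), programs are plain concatenations of regex atoms, and the function names are ours. It only illustrates how a best-first search can discard partial programs that can never satisfy the examples while still returning the highest-probability consistent program.

```python
import heapq
import math
import re

# Hypothetical stand-in for a neural model: a fixed probability per regex atom.
# A real model would condition on the natural language input and partial program.
ATOM_PROBS = {r"\d": 0.4, r"[a-z]": 0.3, r"\d+": 0.2, r"[a-z]+": 0.1}


def prefix_feasible(atoms, positives):
    """Pruning check: a partial regex must match the start of every positive
    example, otherwise no sequence of appended atoms can match it fully."""
    pattern = re.compile("".join(atoms))
    return all(pattern.match(s) is not None for s in positives)


def consistent(atoms, positives, negatives):
    """A complete program must accept all positives and reject all negatives."""
    pattern = re.compile("".join(atoms))
    return (all(pattern.fullmatch(s) for s in positives)
            and not any(pattern.fullmatch(s) for s in negatives))


def synthesize(positives, negatives, max_atoms=4):
    """Uniform-cost search over atom sequences; cost is negative log-probability.

    Costs never decrease as atoms are appended, so the first complete program
    popped from the heap has the highest probability among consistent ones.
    """
    frontier = [(0.0, False, ())]  # (cost, is_complete, atoms)
    while frontier:
        cost, is_complete, atoms = heapq.heappop(frontier)
        if is_complete:
            return "".join(atoms), cost
        if atoms and consistent(atoms, positives, negatives):
            heapq.heappush(frontier, (cost, True, atoms))
        if len(atoms) < max_atoms:
            for atom, prob in ATOM_PROBS.items():
                extended = atoms + (atom,)
                if prefix_feasible(extended, positives):  # prune dead ends
                    heapq.heappush(
                        frontier, (cost - math.log(prob), False, extended))
    return None  # no consistent program within the length limit


if __name__ == "__main__":
    print(synthesize(positives=["a1", "b2"], negatives=["ab"]))
```

With the examples in the `__main__` block, the search prunes every candidate starting with a digit atom immediately and settles on `[a-z]\d`, the most probable program under the toy table that fits all examples.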
